Greetings!

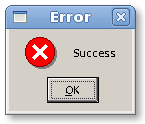

I have oVirt 4.3 with 12 hosts and about 100 VMs. The hosts are all different, and most of the VMs are pinned in groups, each group to its own host. I updated a host and am now trying to migrate a VM back to it, but it flatly refuses: the migration just fails with an error.

Apr 25, 2019, 5:38:40 PM

Migration failed (VM: artix-puppet, Source: cluster-node15, Destination: cluster-node13).

d3c2dbc5-cdf2-4c89-9fd8-e316fd97e1f8

Apr 25, 2019, 5:35:57 PM

Migration started (VM: artix-puppet, Source: cluster-node15, Destination: cluster-node13, User: admin

node15 log

2019-04-25 14:55:57.431+0000: initiating migration

2019-04-25T14:58:46.303306Z qemu-kvm: migration_iteration_finish: Unknown ending state 2

node13 log

2019-04-25 14:44:46.940+0000: starting up libvirt version: 4.5.0, package: 10.el7_6.6 (CentOS BuildSystem <http://bugs.centos.org>, 2019-03-14-10:21:47, x86-01.bsys.centos.org), qemu version: 2.12.0qemu-kvm-ev-2.12.0-18.el7_6.3.1, kernel: 3.10.0-957.1.3.el7.x86_64, hostname: cluster-node13.office.vliga

LC_ALL=C PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin QEMU_AUDIO_DRV=spice /usr/libexec/qemu-kvm -name guest=artix-puppet,debug-threads=on -S -object secret,id=masterKey0,format=raw,file=/var/lib/libvirt/qemu/domain-19-artix-puppet/master-key.aes -machine pc-i440fx-rhel7.3.0,accel=kvm,usb=off,dump-guest-core=off -cpu Westmere,vme=on,pclmuldq=on,x2apic=on,hypervisor=on,arat=on -m size=8388608k,slots=16,maxmem=33554432k -realtime mlock=off -smp 4,maxcpus=16,sockets=16,cores=1,threads=1 -numa node,nodeid=0,cpus=0-3,mem=8192 -uuid 1a44aaa9-c660-4507-ae45-a40bf6ec4e32 -smbios 'type=1,manufacturer=oVirt,product=oVirt Node,version=7-6.1810.2.el7.centos,serial=00000000-0000-0000-0000-AC1F6B4629C0,uuid=1a44aaa9-c660-4507-ae45-a40bf6ec4e32' -no-user-config -nodefaults -chardev socket,id=charmonitor,fd=33,server,nowait -mon chardev=charmonitor,id=monitor,mode=control -rtc base=2019-04-25T17:44:46,driftfix=slew -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device piix3-usb-uhci,id=usb,bus=pci.0,addr=0x1.0x2 -device virtio-scsi-pci,id=ua-fd90aa2a-690a-4063-aead-705c1b5cafb0,bus=pci.0,addr=0x4 -device virtio-serial-pci,id=ua-ddf3198f-854e-4064-810f-08f2fc55d881,max_ports=16,bus=pci.0,addr=0x5 -drive if=none,id=drive-ua-460f6ae4-61e0-4cac-af97-f3e190bd7a4c,werror=report,rerror=report,readonly=on -device ide-cd,bus=ide.1,unit=0,drive=drive-ua-460f6ae4-61e0-4cac-af97-f3e190bd7a4c,id=ua-460f6ae4-61e0-4cac-af97-f3e190bd7a4c -drive file=/rhev/data-center/mnt/cluster-node13:_lv4/5752bd27-5e08-49b1-9a36-7795671c6f6a/images/86e50942-8bc5-4dd8-b5e4-b156246a70e9/4eb9771b-7b2a-4882-8a99-5de51e634892,format=raw,if=none,id=drive-ua-86e50942-8bc5-4dd8-b5e4-b156246a70e9,serial=86e50942-8bc5-4dd8-b5e4-b156246a70e9,werror=stop,rerror=stop,cache=none,aio=threads -device 
scsi-hd,bus=ua-fd90aa2a-690a-4063-aead-705c1b5cafb0.0,channel=0,scsi-id=0,lun=0,drive=drive-ua-86e50942-8bc5-4dd8-b5e4-b156246a70e9,id=ua-86e50942-8bc5-4dd8-b5e4-b156246a70e9,bootindex=1,write-cache=on -netdev tap,fds=36:38:41:42,id=hostua-375e96d6-d404-49cf-9b07-72a042c4b4e5,vhost=on,vhostfds=43:44:45:46 -device virtio-net-pci,mq=on,vectors=10,host_mtu=1500,netdev=hostua-375e96d6-d404-49cf-9b07-72a042c4b4e5,id=ua-375e96d6-d404-49cf-9b07-72a042c4b4e5,mac=00:1a:4a:16:01:53,bus=pci.0,addr=0x3 -chardev socket,id=charchannel0,fd=47,server,nowait -device virtserialport,bus=ua-ddf3198f-854e-4064-810f-08f2fc55d881.0,nr=1,chardev=charchannel0,id=channel0,name=ovirt-guest-agent.0 -chardev socket,id=charchannel1,fd=48,server,nowait -device virtserialport,bus=ua-ddf3198f-854e-4064-810f-08f2fc55d881.0,nr=2,chardev=charchannel1,id=channel1,name=org.qemu.guest_agent.0 -chardev spicevmc,id=charchannel2,name=vdagent -device virtserialport,bus=ua-ddf3198f-854e-4064-810f-08f2fc55d881.0,nr=3,chardev=charchannel2,id=channel2,name=com.redhat.spice.0 -spice port=5912,tls-port=5913,addr=10.252.252.213,x509-dir=/etc/pki/vdsm/libvirt-spice,tls-channel=main,tls-channel=display,tls-channel=inputs,tls-channel=cursor,tls-channel=playback,tls-channel=record,tls-channel=smartcard,tls-channel=usbredir,seamless-migration=on -device qxl-vga,id=ua-b1685cd1-ca38-43e3-9f8d-a364cafe6464,ram_size=67108864,vram_size=8388608,vram64_size_mb=0,vgamem_mb=16,max_outputs=1,bus=pci.0,addr=0x2 -incoming defer -device virtio-balloon-pci,id=ua-ea3bbad7-23a6-4a93-bcc9-9caddb819997,bus=pci.0,addr=0x6 -object rng-random,id=objua-dbec3a7b-d6a0-4c1b-a82d-af0cc5f3db0f,filename=/dev/urandom -device virtio-rng-pci,rng=objua-dbec3a7b-d6a0-4c1b-a82d-af0cc5f3db0f,id=ua-dbec3a7b-d6a0-4c1b-a82d-af0cc5f3db0f,bus=pci.0,addr=0x7 -sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny -msg timestamp=on

2019-04-25 14:44:46.940+0000: Domain id=19 is tainted: hook-script

2019-04-25T14:44:47.045334Z qemu-kvm: -drive file=/rhev/data-center/mnt/cluster-node13:_lv4/5752bd27-5e08-49b1-9a36-7795671c6f6a/images/86e50942-8bc5-4dd8-b5e4-b156246a70e9/4eb9771b-7b2a-4882-8a99-5de51e634892,format=raw,if=none,id=drive-ua-86e50942-8bc5-4dd8-b5e4-b156246a70e9,serial=86e50942-8bc5-4dd8-b5e4-b156246a70e9,werror=stop,rerror=stop,cache=none,aio=threads: 'serial' is deprecated, please use the corresponding option of '-device' instead

2019-04-25T14:44:47.047979Z qemu-kvm: warning: CPU(s) not present in any NUMA nodes: CPU 4 [socket-id: 4, core-id: 0, thread-id: 0], CPU 5 [socket-id: 5, core-id: 0, thread-id: 0], CPU 6 [socket-id: 6, core-id: 0, thread-id: 0], CPU 7 [socket-id: 7, core-id: 0, thread-id: 0], CPU 8 [socket-id: 8, core-id: 0, thread-id: 0], CPU 9 [socket-id: 9, core-id: 0, thread-id: 0], CPU 10 [socket-id: 10, core-id: 0, thread-id: 0], CPU 11 [socket-id: 11, core-id: 0, thread-id: 0], CPU 12 [socket-id: 12, core-id: 0, thread-id: 0], CPU 13 [socket-id: 13, core-id: 0, thread-id: 0], CPU 14 [socket-id: 14, core-id: 0, thread-id: 0], CPU 15 [socket-id: 15, core-id: 0, thread-id: 0]

2019-04-25T14:44:47.048003Z qemu-kvm: warning: All CPU(s) up to maxcpus should be described in NUMA config, ability to start up with partial NUMA mappings is obsoleted and will be removed in future

2019-04-25T14:47:36.025514Z qemu-kvm: Unknown combination of migration flags: 0

2019-04-25T14:47:36.025579Z qemu-kvm: error while loading state section id 3(ram)

2019-04-25T14:47:36.026101Z qemu-kvm: load of migration failed: Invalid argument

2019-04-25 14:47:36.407+0000: shutting down, reason=failed
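
To separate the failure from the startup noise in the destination log, here is a minimal sketch that keeps only the `qemu-kvm:` lines that are neither warnings nor deprecation notices. The function name `qemu_errors` and the filtering rule are my own assumptions based on the excerpt above, not part of oVirt or libvirt:

```python
import re

# Matches lines like "2019-04-25T14:47:36.026101Z qemu-kvm: <message>".
# The line format is assumed from the log excerpt above.
QEMU_LINE = re.compile(r"^(?P<ts>\S+Z) qemu-kvm: (?P<msg>.*)$")


def qemu_errors(lines):
    """Yield (timestamp, message) for qemu-kvm lines that look like errors."""
    for line in lines:
        match = QEMU_LINE.match(line.strip())
        if match is None:
            continue  # libvirt lines, the command line, blank lines
        msg = match.group("msg")
        # Heuristic: skip warnings and deprecation notices.
        if msg.startswith("warning:") or "is deprecated" in msg:
            continue
        yield match.group("ts"), msg


if __name__ == "__main__":
    sample = [
        "2019-04-25T14:44:47.048003Z qemu-kvm: warning: All CPU(s) up to maxcpus should be described in NUMA config",
        "2019-04-25T14:47:36.025514Z qemu-kvm: Unknown combination of migration flags: 0",
        "2019-04-25T14:47:36.025579Z qemu-kvm: error while loading state section id 3(ram)",
        "2019-04-25T14:47:36.026101Z qemu-kvm: load of migration failed: Invalid argument",
        "2019-04-25 14:47:36.407+0000: shutting down, reason=failed",
    ]
    for ts, msg in qemu_errors(sample):
        print(ts, msg)
```

On the node13 excerpt this leaves the three lines from 14:47:36, which are the ones that describe the failed incoming migration.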

cat engine.log

2019-04-25 17:38:39,555+03 INFO [org.ovirt.engine.core.vdsbroker.monitoring.VmAnalyzer] (ForkJoinPool-1-worker-7) [] VM '1a44aaa9-c660-4507-ae45-a40bf6ec4e32' was reported as Down on VDS '4dd05259-4423-4e0e-be5c-63eaaae8f6f1'(cluster-node13)

2019-04-25 17:38:39,555+03 INFO [org.ovirt.engine.core.vdsbroker.vdsbroker.DestroyVDSCommand] (ForkJoinPool-1-worker-7) [] START, DestroyVDSCommand(HostName = cluster-node13, DestroyVmVDSCommandParameters:{hostId='4dd05259-4423-4e0e-be5c-63eaaae8f6f1', vmId='1a44aaa9-c660-4507-ae45-a40bf6ec4e32', secondsToWait='0', gracefully='false', reason='', ignoreNoVm='true'}), log id: 110a93be

2019-04-25 17:38:39,889+03 INFO [org.ovirt.engine.core.vdsbroker.vdsbroker.DestroyVDSCommand] (ForkJoinPool-1-worker-7) [] FINISH, DestroyVDSCommand, return: , log id: 110a93be

2019-04-25 17:38:39,889+03 INFO [org.ovirt.engine.core.vdsbroker.monitoring.VmAnalyzer] (ForkJoinPool-1-worker-7) [] VM '1a44aaa9-c660-4507-ae45-a40bf6ec4e32'(artix-puppet) was unexpectedly detected as 'Down' on VDS '4dd05259-4423-4e0e-be5c-63eaaae8f6f1'(cluster-node13) (expected on '74561724-3d82-42be-888f-c757b3d8c3eb')

2019-04-25 17:38:39,889+03 ERROR [org.ovirt.engine.core.vdsbroker.monitoring.VmAnalyzer] (ForkJoinPool-1-worker-7) [] Migration of VM 'artix-puppet' to host 'cluster-node13' failed: VM destroyed during the startup.

2019-04-25 17:38:39,892+03 INFO [org.ovirt.engine.core.vdsbroker.monitoring.VmAnalyzer] (ForkJoinPool-1-worker-0) [] VM '1a44aaa9-c660-4507-ae45-a40bf6ec4e32'(artix-puppet) moved from 'MigratingFrom' --> 'Up'

2019-04-25 17:38:39,892+03 INFO [org.ovirt.engine.core.vdsbroker.monitoring.VmAnalyzer] (ForkJoinPool-1-worker-0) [] Adding VM '1a44aaa9-c660-4507-ae45-a40bf6ec4e32'(artix-puppet) to re-run list

2019-04-25 17:38:39,954+03 ERROR [org.ovirt.engine.core.vdsbroker.monitoring.VmsMonitoring] (ForkJoinPool-1-worker-0) [] Rerun VM '1a44aaa9-c660-4507-ae45-a40bf6ec4e32'. Called from VDS 'cluster-node15'

2019-04-25 17:38:39,983+03 INFO [org.ovirt.engine.core.vdsbroker.vdsbroker.MigrateStatusVDSCommand] (EE-ManagedThreadFactory-engine-Thread-148) [] START, MigrateStatusVDSCommand(HostName = cluster-node15, MigrateStatusVDSCommandParameters:{hostId='74561724-3d82-42be-888f-c757b3d8c3eb', vmId='1a44aaa9-c660-4507-ae45-a40bf6ec4e32'}), log id: 5f72fe10

2019-04-25 17:38:39,987+03 INFO [org.ovirt.engine.core.vdsbroker.vdsbroker.MigrateStatusVDSCommand] (EE-ManagedThreadFactory-engine-Thread-148) [] FINISH, MigrateStatusVDSCommand, return: , log id: 5f72fe10
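
When reading these logs side by side, note that the engine.log stamps carry a `+03` offset, while the libvirt and qemu-kvm lines on the hosts are in UTC (`+0000` / `Z`). A minimal sketch for putting both on one timeline; the function names are hypothetical and the timestamp formats are assumed from the excerpts above:

```python
from datetime import datetime, timezone


def engine_ts_to_utc(ts):
    """Convert an engine.log stamp such as '2019-04-25 17:38:39,889+03' to UTC."""
    # engine.log writes the offset as hours only ("+03"); strptime's %z
    # expects "+0300", so pad it. Assumes the stamp always ends in sign + 2 digits.
    parsed = datetime.strptime(ts + "00", "%Y-%m-%d %H:%M:%S,%f%z")
    return parsed.astimezone(timezone.utc)


def qemu_ts_to_utc(ts):
    """Convert a qemu-kvm stamp such as '2019-04-25T14:47:36.026101Z' to UTC."""
    # The trailing "Z" means UTC; strptime treats it as a literal here,
    # so the timezone is attached explicitly.
    parsed = datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S.%fZ")
    return parsed.replace(tzinfo=timezone.utc)


if __name__ == "__main__":
    print(engine_ts_to_utc("2019-04-25 17:38:39,889+03"))
    print(qemu_ts_to_utc("2019-04-25T14:47:36.026101Z"))
```

Subtracting the two results gives the offset between an engine event and the matching host log line.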

Why won't it migrate? How do I troubleshoot this?